Hello!

I'm Selin! Machine Learning Engineer @ Seez and Computer Science Graduate @ UoWarwick. Scroll down to learn more!

Don't have a lot of time?

To learn more about me and what I do, click through these sections:

Contact Me

Education

2020 - 2023 | Coventry | University of Warwick - BSc in Computer Science

Bachelor of Science (with Honours) in Computer Science (2:1)

Additional (optional) modules completed: Computer Security (CS140), Functional Programming (CS141), Artificial Intelligence (CS255), Advanced Computer Architecture (CS257), Mobile Robotics (CS313), Neural Computing (CS331), Machine Learning (CS342), Principles of Programming Languages (CS349), Project Management for Computer Scientists (CS352)

My dissertation and the projects completed throughout the course can be found in the Projects section.

2018 - 2020 | London | Ashcroft Technology Academy - A-Levels

A-Levels: Mathematics (A*), Computer Science (A), Chemistry (A)

AS-Level: Biology (A)

Copper Award in the 2019 Cambridge Chemistry Challenge, C3L6

2016 - 2018 | Dubai | The English College - GCSEs

Combined Science (9-9), Mathematics (8), English Language (7), English Literature (7)

Geography (7), Business Studies (A), Art & Design (7)

Work Experience

Amazon | Software Engineer (Internship)

Jul 2022 - Nov 2022

As part of the Scout delivery robot project, designed and developed complex Python transformation logic to convert Robot Operating System (ROS) and telemetry data for visualization on a Perfetto dashboard.

Reduced robot troubleshooting and root-cause analysis time by at least 50%.

Return offer was rescinded following the layoffs and hiring freeze :(

Jul 2021 - Oct 2021

Developed a remote control application for Amazon Scout robots, allowing developers to deploy software and monitor/control it remotely.

Built a custom Raspberry Pi edge device to act as a remote-control hub connecting to robots in the testing field.

Developed a Python application providing a UI for developers to perform operations remotely on the robots.

Integrated the Raspberry Pi device with AWS IoT Core to capture device logs and monitor performance.

Projects

Below is a mixture of my personal projects and university projects.

AppGenPro | Python, AutoGen, OpenAI API

Advancing Person Re-ID with Deep Learning | Undergrad Project | Python, PyTorch, Pandas

Accent Detection: Navigating High-Dimensional Spaces | CS342 ML Project | Python, Pandas

Custom JavaCC Parser and Evaluator | CS259

Tautology Prover in Prolog | CS262

Intrusion Detection System | CS241 | C, pcap

Gig Scheduling System | CS258 | Java, SQL

Mentor Matching Application | CS261 Group Project | Python, Flask, React, JS

Conjugate Gradient Solver | CS257 | C

Optimizing Timetables with AI | CS255 | Python

UNIX CLI Editor | C

Large Arithmetic Collider | CS141 | Haskell

Scratch Clone | CS141 | Haskell

Robot Maze Navigator | CS118 | Java

WAFFLES | CS126 | Java

Autonomous Drone | A-Level Project

AppGenPro

Automatically create complex business solutions and apps: write your high-level requirements and let AI-powered AppGenPro build fully functioning applications without you touching a line of code!

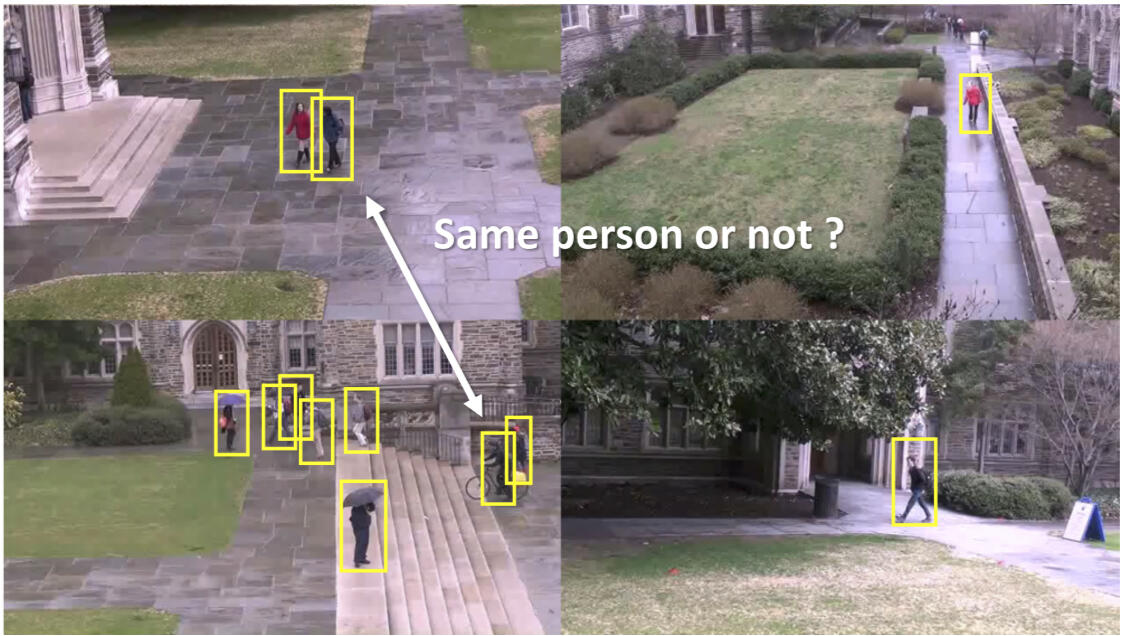

Advancing Person Re-ID with Deep Learning

In my undergraduate dissertation, I tackled the challenge of person re-identification (re-ID), a crucial technology for enhancing video surveillance, pedestrian tracking, and forensic analysis. My goal was to create a re-ID system that stood out for its accuracy and adaptability, capable of identifying individuals across different camera views under varying conditions.

I approached this task by designing a flexible re-ID model, treating the identification process as an instance retrieval problem. This perspective allowed me to incorporate few-shot classification techniques, aiming to build a system that learns effectively from limited data while maintaining robustness in diverse environments.

A significant part of my research focused on improving the model's generalization capabilities. I explored deep metric learning, aiming to refine the model's ability to handle variations in people's appearance and in dynamic settings.

Through experimenting with data augmentation, I introduced more variety into our supervised datasets. Moreover, by combining triplet loss with cross-entropy loss with label smoothing, I improved the learned feature embeddings, which was pivotal to the model's performance.

One of the most enlightening findings from my work was that unsupervised pre-training on a specialized person re-ID dataset was more effective than conventional ImageNet pre-training. This approach proved to be a game-changer, significantly boosting the accuracy and robustness of the re-ID models, particularly in scenarios where labeled data was scarce.

My evaluation of the system on the Market-1501 benchmark dataset demonstrated its effectiveness, achieving a rank-1 accuracy of 91% and a mean Average Precision (mAP) of 78.6%. These results were on par with, or even superior to, current state-of-the-art methods, validating my approach.

This project underscored the potential of deep learning, metric learning, and few-shot classification for improving person re-identification systems. The high accuracy and robustness to environmental variations that I achieved highlight the success of my methods, including the strategic use of unsupervised pre-training and data augmentation.
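To make the loss combination above concrete, here is a minimal plain-Python sketch for a single triplet and a single classification output. The margin of 0.3, the smoothing factor epsilon = 0.1, the equal weighting of the two terms and the function names are assumptions for illustration, not values or code taken from the dissertation.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def triplet_loss(anchor, positive, negative, margin=0.3):
    """Hinge on d(a, p) - d(a, n) + margin: pulls same-ID embeddings together, pushes other IDs apart."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)

def smoothed_cross_entropy(logits, target, epsilon=0.1):
    """Cross-entropy against a label-smoothed target distribution."""
    k = len(logits)
    m = max(logits)  # subtract the largest logit for numerical stability
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    log_probs = [z - log_z for z in logits]
    # Smoothed target: (1 - epsilon) on the true identity, plus epsilon / k spread over all k classes
    weights = [(1 - epsilon) * (i == target) + epsilon / k for i in range(k)]
    return -sum(w * lp for w, lp in zip(weights, log_probs))

def combined_loss(anchor, positive, negative, logits, target, margin=0.3, epsilon=0.1):
    """Metric-learning term plus ID-classification term (equal weighting is an assumption)."""
    return (triplet_loss(anchor, positive, negative, margin)
            + smoothed_cross_entropy(logits, target, epsilon))
```

In PyTorch the same two terms are available as `torch.nn.TripletMarginLoss` and `torch.nn.CrossEntropyLoss` with its `label_smoothing` argument, so a real training loop would normally add those two losses over a batch rather than use hand-written functions like these.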

Accent Detection: Navigating High-Dimensional Spaces

For my CS342 machine learning course, I built a speaker accent classification model targeting accents from six countries. The project began with Principal Component Analysis (PCA): plotting the data on its first two principal components to assess whether it was linearly separable.

Key phases of the project included:

Initial Model: Building a multi-class perceptron model on the dataset in its original feature space to establish a baseline for classification performance.

Linear PCA Application: Using linear PCA to define alternative, reduced-dimensionality feature spaces, then learning a multi-class perceptron model in each, with the aim of improving accuracy.

Non-linear PCA Exploration: Moving on to non-linear (kernel) PCA, applying the kernel trick to project the data into a higher-dimensional space where the accent classes are more clearly separated.

Hyper-parameter Optimization: Running a grid search over a selected subset of the hyper-parameter space to fine-tune the kernel perceptron model for maximum classification accuracy.

This project highlighted the effectiveness of PCA for feature-space exploration and the importance of choosing the right model and parameters in machine learning. Through systematic experimentation, from basic perceptron models to advanced kernel methods, I gained insight into the complexities of building and optimizing classification models for real-world data.
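The kernel trick used in the later phases can be illustrated with a small kernel perceptron. This is a minimal binary sketch, assuming a degree-2 polynomial kernel and labels of +1/-1; a multi-class version could train one such classifier per accent (one-vs-rest), which is an assumption rather than the coursework's exact setup. The function names are hypothetical.

```python
def poly_kernel(x, z, degree=2):
    """Polynomial kernel (1 + x.z)^degree: an implicit map into a higher-dimensional feature space."""
    return (1 + sum(a * b for a, b in zip(x, z))) ** degree

def train_kernel_perceptron(X, y, kernel=poly_kernel, epochs=100):
    """Dual-form perceptron: alpha[i] counts the mistakes made on training point i."""
    n = len(X)
    gram = [[kernel(a, b) for b in X] for a in X]  # precomputed kernel values
    alpha = [0] * n
    for _ in range(epochs):
        mistakes = 0
        for i in range(n):
            score = sum(alpha[j] * y[j] * gram[j][i] for j in range(n))
            if y[i] * score <= 0:  # misclassified (or on the boundary): strengthen point i
                alpha[i] += 1
                mistakes += 1
        if mistakes == 0:  # a full pass without mistakes: training has converged
            break
    return alpha

def predict(x, X, y, alpha, kernel=poly_kernel):
    """Sign of the kernel-weighted vote of the training points."""
    score = sum(a * yi * kernel(xi, x) for a, yi, xi in zip(alpha, y, X))
    return 1 if score > 0 else -1
```

On XOR-style data, which no linear perceptron can separate, the degree-2 kernel lets the perceptron fit all four points. A grid search like the one described above would wrap training in a loop over candidate kernel parameters such as `degree` and keep the setting with the best validation accuracy.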

Custom Parser and Evaluator

JavaCC (Java Compiler Compiler) is a parser generator for Java. Given a grammar written in an extended BNF notation, it generates Java source code for a parser that recognizes the language the grammar describes, which greatly simplifies building parsers for custom languages. This made it well suited to this project, where capturing the syntactic structure of a custom language was central.

In the CS259 course, which focuses on the principles of formal languages, I designed and implemented a parser and interpreter for a simple, integer-based custom programming language named PLM (Programming Language for Mathematics). The project used JavaCC to generate the PLM parser, with Gradle managing the build, and evaluated PLM expressions, with the aim of understanding the underpinnings of language design, parsing, and execution.

The development process began with defining PLM's grammar in a format compatible with JavaCC. The grammar included rules for integer assignments, arithmetic expressions, and basic function definitions and calls. Once the grammar was specified, JavaCC generated the parser code automatically.

The next step was implementing the interpreter in Java. The interpreter traversed the syntax tree produced by the JavaCC-generated parser, evaluating expressions and executing statements according to PLM's semantics. Special attention was given to correctly handling variable scopes, especially within functions, to keep the language logically consistent.

Successfully implementing the PLM parser and interpreter not only demonstrated the capabilities of JavaCC and Gradle but also deepened my understanding of the principles underpinning formal languages and compilers.
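The parse-then-evaluate pipeline described above can be sketched in a few dozen lines. This is a Python illustration, not the original JavaCC/Java implementation: a hand-written recursive-descent parser builds a small syntax tree of tuples, and a separate evaluator walks it. The `name = expr;` statement syntax is a hypothetical stand-in for PLM's grammar, functions and scopes are omitted, and `/` is assumed to be floor division.

```python
import re

# One token: an integer literal, an identifier, or a single-character operator
TOKEN_RE = re.compile(r"\s*(\d+|[A-Za-z_]\w*|[-+*/()=;])")

def tokenize(src):
    tokens, pos, src = [], 0, src.rstrip()
    while pos < len(src):
        match = TOKEN_RE.match(src, pos)
        if match is None:
            raise SyntaxError(f"unexpected character at position {pos}")
        tokens.append(match.group(1))
        pos = match.end()
    return tokens

class Parser:
    """Grammar: program := (NAME '=' expr ';')*, expr := term (('+'|'-') term)*,
    term := factor (('*'|'/') factor)*, factor := NUMBER | NAME | '(' expr ')' | '-' factor"""

    def __init__(self, tokens):
        self.tokens, self.pos = tokens, 0

    def peek(self):
        return self.tokens[self.pos] if self.pos < len(self.tokens) else None

    def eat(self, expected=None):
        tok = self.peek()
        if tok is None or (expected is not None and tok != expected):
            raise SyntaxError(f"expected {expected!r}, got {tok!r}")
        self.pos += 1
        return tok

    def program(self):
        stmts = []
        while self.peek() is not None:
            name = self.eat()
            if not name.isidentifier():
                raise SyntaxError(f"expected a variable name, got {name!r}")
            self.eat("=")
            stmts.append(("assign", name, self.expr()))
            self.eat(";")
        return stmts

    def expr(self):
        node = self.term()
        while self.peek() in ("+", "-"):
            op = self.eat()
            node = (op, node, self.term())
        return node

    def term(self):
        node = self.factor()
        while self.peek() in ("*", "/"):
            op = self.eat()
            node = (op, node, self.factor())
        return node

    def factor(self):
        tok = self.eat()
        if tok == "(":
            node = self.expr()
            self.eat(")")
            return node
        if tok == "-":
            return ("neg", self.factor())
        if tok.isdigit():
            return ("num", int(tok))
        if tok.isidentifier():
            return ("var", tok)
        raise SyntaxError(f"unexpected token {tok!r}")

def evaluate(node, env):
    """Walk the syntax tree, looking variables up in env."""
    kind = node[0]
    if kind == "num":
        return node[1]
    if kind == "var":
        if node[1] not in env:
            raise NameError(f"undefined variable {node[1]!r}")
        return env[node[1]]
    if kind == "neg":
        return -evaluate(node[1], env)
    left, right = evaluate(node[1], env), evaluate(node[2], env)
    if kind == "+":
        return left + right
    if kind == "-":
        return left - right
    if kind == "*":
        return left * right
    return left // right  # "/": floor division (assumed semantics)

def run(src):
    env = {}
    for _, name, expr in Parser(tokenize(src)).program():
        env[name] = evaluate(expr, env)
    return env
```

For example, `run("x = 2 + 3 * 4; y = (x - 4) / 2;")` returns `{'x': 14, 'y': 5}`. Operator precedence falls out of the grammar itself, since `term` (for `*` and `/`) sits below `expr` (for `+` and `-`).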

Tautology Prover in Prolog

Prolog, short for Programming in Logic, is a high-level programming language that is associated with artificial intelligence and computational linguistics. Unlike imperative languages that specify how to perform tasks, Prolog is declarative, focusing on what relationships and facts hold true. It operates on the principles of formal logic, making it an ideal tool for tasks that involve complex pattern matching, tree-based searches, and, notably, tasks requiring sophisticated deductive reasoning like tautology proving.

Resolution Method: Resolution is an inference rule for propositional and first-order logic that combines two clauses containing complementary literals (such as p and ¬p) into a new clause. Deriving the empty clause from a set of clauses shows that the set is contradictory. In this project, it was used to simplify the logical expressions and identify contradictions.

Proof by Refutation: This technique assumes the negation of the statement to be proved and shows that this assumption leads to a contradiction, so the original statement must be true (a tautology). In Prolog, this was realized through the language's built-in backtracking and logical inference, allowing the program to explore the possibilities until it either derives a contradiction (proving the statement) or runs out of possibilities.

The core of this project was a prover that determines whether a given logical statement is a tautology, implemented in Prolog using the Resolution method together with proof by refutation.

One significant challenge was encoding the logical statements and the rules of inference in a form Prolog could process effectively. This required a deep understanding of both Prolog's syntax and semantics and the logical foundations of the Resolution method and proof by refutation. By iteratively refining the representations and the inference rules, I developed a robust system that accurately identifies tautologies.

Another challenge was handling complex or nested logical statements efficiently. By carefully structuring the Prolog rules and leveraging Prolog's inherent strengths in logical deduction, I improved the prover's efficiency, reducing the work needed to complete a proof.
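The combination of resolution and proof by refutation can be sketched in Python (not the original Prolog). The formula is negated, rewritten into clause form, and resolution is applied until either the empty clause appears (the negation is contradictory, so the formula is a tautology) or no new clauses can be derived. The tuple-based formula representation and the function names are assumptions for illustration.

```python
from itertools import combinations

# Formulas are nested tuples: ("var", name), ("not", f), ("and", f, g), ("or", f, g), ("imp", f, g)

def nnf(f, negate=False):
    """Remove implications and push negations down to the variables (negation normal form)."""
    op = f[0]
    if op == "var":
        return ("lit", f[1], not negate)
    if op == "not":
        return nnf(f[1], not negate)
    if op == "imp":  # a -> b is equivalent to (not a) or b
        return nnf(("or", ("not", f[1]), f[2]), negate)
    left, right = nnf(f[1], negate), nnf(f[2], negate)
    # De Morgan: negating a conjunction gives a disjunction and vice versa
    return ("and" if (op == "and") != negate else "or", left, right)

def cnf(f):
    """Turn an NNF formula into a set of clauses (frozensets of (name, polarity) literals)."""
    if f[0] == "lit":
        return {frozenset([(f[1], f[2])])}
    left, right = cnf(f[1]), cnf(f[2])
    if f[0] == "and":
        return left | right
    return {a | b for a in left for b in right}  # distribute "or" over "and"

def resolvents(c1, c2):
    """All clauses obtained by resolving c1 and c2 on a complementary pair of literals."""
    return [(c1 - {(name, pos)}) | (c2 - {(name, not pos)})
            for name, pos in c1 if (name, not pos) in c2]

def is_tautology(formula):
    """Refutation: the formula is a tautology iff its negation resolves to the empty clause."""
    clauses = cnf(nnf(formula, negate=True))
    while True:
        new = set()
        for c1, c2 in combinations(clauses, 2):
            for r in resolvents(c1, c2):
                if not r:
                    return True  # empty clause: the negation is contradictory
                new.add(r)
        if new <= clauses:
            return False  # nothing new can be derived, so no contradiction exists
        clauses |= new
```

For example, with `p = ("var", "p")` and `q = ("var", "q")`, `is_tautology(("or", p, ("not", p)))` is `True` while `is_tautology(("imp", p, q))` is `False`. Saturating the clause set like this is simple but can grow quickly on larger formulas, which is where efficiency work of the kind described above matters.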

Intrusion Detection System

A multi-threaded intrusion detection system written in C using the pcap library, which sniffs packets to identify common cyber threats such as SYN flood attacks, ARP cache poisoning, and blacklisted URL requests.
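One common way to spot a possible SYN flood is to count connection-opening packets (SYN set, ACK clear) per source address. The sketch below shows that heuristic in Python; it is not the original C/pcap code, and the threshold, the per-source grouping and the function names are assumptions. It takes raw TCP header bytes, as they would appear after skipping the link-layer and IP headers of a captured packet, and reads the flag bits from byte 13 of the TCP header.

```python
from collections import Counter

TCP_FLAGS_OFFSET = 13  # byte 13 of the TCP header holds the control flags
SYN, ACK = 0x02, 0x10

def is_syn(tcp_header: bytes) -> bool:
    """True for a connection-opening SYN (SYN set, ACK not set)."""
    flags = tcp_header[TCP_FLAGS_OFFSET]
    return bool(flags & SYN) and not flags & ACK

def suspicious_sources(packets, threshold):
    """packets: iterable of (source_ip, tcp_header_bytes); flag sources sending >= threshold SYNs."""
    counts = Counter(src for src, header in packets if is_syn(header))
    return {src for src, n in counts.items() if n >= threshold}
```

SYN-ACK replies are excluded so that a host answering legitimate connections is not counted. A real detector would also reason about time windows and spoofed source addresses, which this sketch leaves out.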

Gig Scheduling System

Designed the schema for a database to store information about gigs and artists and wrote queries, stored procedures and views to interact with the database according to a specification. Written in SQL.

Mentor Matching Application

Group project sponsored by Deutsche Bank to create a mentorship application that matches employees across the organization, supporting the growth of its mentoring culture and accelerating employee engagement.

Conjugate Gradient Solver

Optimizing ACACGS (a conjugate gradient proxy application for a 3D mesh) using a range of techniques, from compiler optimizations and OpenMP pragmas (multithreading) to AVX2 intrinsics for vectorizing selected code sections.
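For context, the conjugate gradient method that ACACGS is built around can be sketched in plain Python. This sketch assumes a small dense symmetric positive-definite matrix, whereas the real application works on a 3D mesh and is written in C; the matrix-vector product, dot products and vector updates inside the loop are the kind of kernels that OpenMP threading and AVX2 vectorization would target. The function names are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def matvec(A, v):
    return [dot(row, v) for row in A]

def conjugate_gradient(A, b, tol=1e-10, max_iter=None):
    """Solve A x = b for a symmetric positive-definite matrix A."""
    n = len(b)
    x = [0.0] * n
    r = [float(bi) for bi in b]  # residual b - A x, with x starting at zero
    p = r[:]                     # the first search direction is the residual
    rs_old = dot(r, r)
    for _ in range(max_iter or 10 * n):
        if rs_old ** 0.5 < tol:  # converged (also covers b == 0)
            break
        Ap = matvec(A, p)
        alpha = rs_old / dot(p, Ap)  # step length along p
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rs_new = dot(r, r)
        beta = rs_new / rs_old
        p = [ri + beta * pi for ri, pi in zip(r, p)]  # next A-conjugate direction
        rs_old = rs_new
    return x
```

In exact arithmetic the method converges in at most n iterations for an n-by-n system; the `10 * n` cap is a safety margin for floating-point round-off.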

Optimizing Timetables with AI

An intelligent timetable scheduler that treats scheduling as a constraint satisfaction problem (CSP), using backtracking search with the MRV (minimum remaining values) and LCV (least constraining value) heuristics alongside simulated annealing to find good event schedules under complex constraints.
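The backtracking part of the scheduler can be sketched as follows, modeling a timetable as events that each need a slot, where conflicting events (for example, ones sharing a room or attendee) may not share a slot. This Python sketch, including the conflict-pair model and the function name, is an assumption for illustration; it shows MRV (pick the event with the fewest legal slots) and LCV (try first the slot that rules out the fewest options for unscheduled neighbors) and leaves out simulated annealing.

```python
def schedule(events, slots, conflicts):
    """Backtracking search with MRV and LCV; returns {event: slot} or None if impossible."""
    neighbors = {e: set() for e in events}
    for a, b in conflicts:
        neighbors[a].add(b)
        neighbors[b].add(a)

    def legal(event, assignment):
        taken = {assignment[n] for n in neighbors[event] if n in assignment}
        return [s for s in slots if s not in taken]

    def backtrack(assignment):
        if len(assignment) == len(events):
            return dict(assignment)
        unassigned = [e for e in events if e not in assignment]
        # MRV: the most constrained event first, so dead ends are found early
        event = min(unassigned, key=lambda e: len(legal(e, assignment)))

        def ruled_out(slot):
            # LCV: how many unscheduled neighbors would lose this slot
            return sum(1 for n in neighbors[event]
                       if n not in assignment and slot in legal(n, assignment))

        for slot in sorted(legal(event, assignment), key=ruled_out):
            assignment[event] = slot
            result = backtrack(assignment)
            if result is not None:
                return result
            del assignment[event]  # undo and try the next slot
        return None

    return backtrack({})
```

MRV tends to expose dead ends early, while LCV keeps as many options open as possible for the events still to be placed; together they usually cut down the amount of backtracking compared with a fixed ordering.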

CLI Editor

A UNIX command-line editor from my first-year computer architecture module. It supports file and line operations, such as creation, editing, and deletion, within the working directory. A notable feature is a spell-check function, activated with Ctrl-F, which highlights misspellings in real time.

Large Arithmetic Collider

Developed a Haskell-based solver for the Large Arithmetic Collider (LAC), a complex numerical puzzle game, showcasing algorithmic problem-solving and functional programming prowess.

Scratch Clone

Crafted an interpreter in Haskell for simple Scratch programs, enabling evaluation of program results or identification of errors.

Robot Maze Navigator

In my first year of computer science studies (CS118: Programming for Computer Scientists), I worked on the Robot Maze project, a practical, hands-on introduction to Java programming. The project involved writing a set of controllers to guide a robot through various mazes, using algorithms of increasing complexity to reach the goal efficiently. The aim was to progressively improve the robot's navigation, starting from simple random exploration and moving on to advanced pathfinding strategies such as depth-first and breadth-first search, cycle checking, and goal-directed shortest-path algorithms.

Algorithm Implementation: Initially employed random movement, then advanced to systematic exploration techniques, including depth-first and breadth-first search, to navigate the mazes.

Cycle Checking: Integrated cycle detection to prevent the robot from revisiting previously explored paths, optimizing the exploration process.

Goal-Directed Navigation: In the later stages, used knowledge of the goal's location in the maze to implement efficient pathfinding algorithms, significantly reducing the time and steps needed to reach the goal.

Java Programming: This project served as a deep dive into Java, improving my understanding of object-oriented programming, interfaces, and algorithmic thinking.

This project was an invaluable introduction to Java and algorithmic thinking, laying a solid foundation for my programming skills. It highlighted the importance of a methodical approach to problem-solving and the effectiveness of well-chosen algorithms for navigating complex problems.
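Breadth-first search with cycle checking, as described above, can be sketched on a simple grid maze. This is a Python illustration, not the CS118 Java robot API: the maze is assumed to be a list of strings with `#` for walls, and a `parent` dictionary serves both as the visited set (cycle checking) and for reconstructing the path. The function name is hypothetical.

```python
from collections import deque

def bfs_path(maze, start, goal):
    """Return a shortest list of (row, col) cells from start to goal, or None if unreachable."""
    rows, cols = len(maze), len(maze[0])
    parent = {start: None}  # visited set (cycle checking) plus back-pointers for the path
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # follow back-pointers to the start
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and maze[nr][nc] != "#" and nxt not in parent:
                parent[nxt] = cell
                queue.append(nxt)
    return None
```

Because BFS expands cells in order of their distance from the start, the first path it finds to the goal is a shortest one; swapping the queue for a stack would give depth-first search, which still finds a path but not necessarily the shortest.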

WAFFLES

In CS126: Data Structures and Algorithms, I developed WAFFLES (Warwick's Amazing Fast Food Logistic Engagement Service), a back-end system for efficiently managing a restaurant review site. The goal was a robust system that could add, sort, and retrieve restaurant and review data. A significant challenge was the influx of bad or invalid data, which called for a design that could identify, correct, or discard such data without compromising the system's performance.

This project was a pivotal moment in my education, where I applied theoretical knowledge of data structures and algorithms to a real-world problem. It reinforced the importance of choosing the right data structures and the impact of algorithmic efficiency on a system's overall performance.

Features:

Custom Data Structures: Implemented hash maps and binary trees from scratch to manage the site's data efficiently, optimizing for quick searches, additions, and deletions.

Data Validation: Developed algorithms to validate and clean incoming data, ensuring the integrity and reliability of the stored information.

Efficient Sorting and Retrieval: Created sorting algorithms tailored to the needs of the service, allowing for fast organization and retrieval of restaurant and review data based on various criteria.

Scalability and Performance: Focused on scalability and performance from the outset, ensuring that the system could handle large volumes of data and user requests simultaneously without significant delays.
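As an illustration of the kind of hand-written structure listed above, here is a minimal hash map using separate chaining. WAFFLES itself was written in Java; this Python sketch, its class name, the 0.75 load-factor threshold and the doubling resize policy are assumptions for illustration.

```python
class HashMap:
    """Separate-chaining hash map: each bucket is a list of (key, value) pairs."""

    def __init__(self, capacity=8):
        self._buckets = [[] for _ in range(capacity)]
        self._size = 0

    def _bucket(self, key):
        return self._buckets[hash(key) % len(self._buckets)]

    def put(self, key, value):
        bucket = self._bucket(key)
        for i, (k, _) in enumerate(bucket):
            if k == key:  # existing key: overwrite in place
                bucket[i] = (key, value)
                return
        bucket.append((key, value))
        self._size += 1
        if self._size > 0.75 * len(self._buckets):  # keep chains short
            self._resize()

    def get(self, key, default=None):
        for k, v in self._bucket(key):
            if k == key:
                return v
        return default

    def remove(self, key):
        bucket = self._bucket(key)
        for i, (k, _) in enumerate(bucket):
            if k == key:
                del bucket[i]
                self._size -= 1
                return True
        return False

    def _resize(self):
        # Double the bucket count and rehash every entry into the new table
        old_items = [item for bucket in self._buckets for item in bucket]
        self._buckets = [[] for _ in range(2 * len(self._buckets))]
        for k, v in old_items:
            self._bucket(k).append((k, v))

    def __len__(self):
        return self._size
```

Resizing once the load factor passes a threshold keeps the average chain length bounded, so lookups, insertions and deletions stay close to constant time on average as the data grows.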

Autonomous Drone

In this high school project, I built and programmed a 250 mm-class quadcopter drone for both manual control and autonomous object detection, using a Raspberry Pi 3 Model B+ and the MobileNet SSD architecture. The drone's key feature is its ability to identify objects and provide relevant information about them.